|

Aggregated Category

|

App Store Original Category

|

|

Apps

|

Accessories & Supplies, Activity Tracking, Alarms & Clocks, All-in-One Tools, Audio & Video Accessories, Battery Savers, Calculators, Calendars, Communication, Currency Converters, Customization, Device Tracking, Diaries, Digital Signage, Document Editing, File Management, File Transfer, Funny Pictures, General Social Networking, Meetings & Conferencing, Menstrual Trackers, Navigation, Offline Maps, Photo & Media Apps, Photo & Video, Photo Apps, Photo-Sharing, Productivity, Reference, Remote Controls, Remote Controls & Accessories, Remote PC Access, Ringtones & Notifications, Screensavers, Security, Simulation, Social, Speed Testing, Streaming Media Players, Themes, Thermometers & Weather Instruments, Transportation, Unit Converters, Utilities, Video-Sharing, Wallpapers & Images, Web Browsers, Web Video, Wi-Fi Analyzers

|

|

Beauty

|

Beauty & Cosmetics

|

|

Books

|

Book Info & Reviews, Book Readers & Players, Books & Comics

|

|

Business

|

Business, Business Locators, Stocks & Investing

|

|

Education

|

Education, Educational, Flash Cards, Learning & Education

|

|

Fashion & Style

|

Fashion, Style, Style & Fashion

|

|

Finance

|

Stocks & Investing

|

|

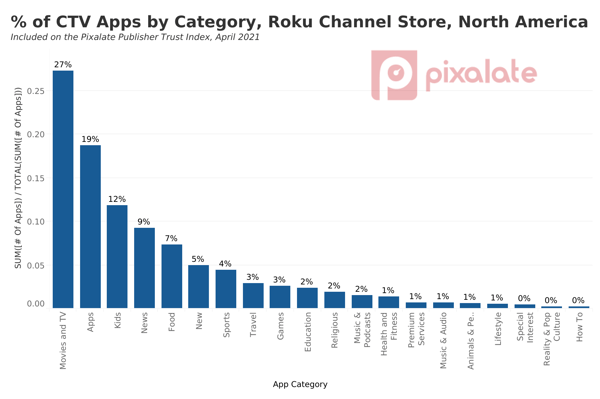

Food

|

Cooking & Recipes, Food, Food & Drink, Food & Home, Wine & Beverages

|

|

Games

|

Arcade, Board, Bowling, Brain & Puzzle, Cards, Casino, Game Accessories, Game Rules, Games & Accessories, Jigsaw Puzzles, Puzzles, Racing, Role Playing, Standard Game Dice, Strategy, Trivia, Words

|

|

Health and Fitness

|

Exercise, Exercise Motivation, Fitness, Health & Fitness, Health & Wellness, Meditation Guides, Nutrition & Diet, Workout, Workout Guides, Yoga, Yoga Guides

|

|

How To

|

Guides & How-Tos

|

|

Kids

|

Kids & Family

|

|

Lifestyle

|

Crafts & DIY, Furniture, Home & Garden, Hotel Finders, Living Room Furniture, Sounds & Relaxation, TV & Media Furniture, Wedding

|

|

Movies & TV

|

Action, Adventure, Cable Alternative,Classic TV, Comedy, Crafts & DIY, Crime & Mystery, Filmes & TV, International, Movie & TV Streaming, Movie Info & Reviews, Movies & TV, On-Demand Movie Streaming, On-Demand Music Streaming, Reality & Pop Culture, Rent or Buy Movies, Sci & Tech, Television & Video, Television Stands & Entertainment Centers, Top Free Movies & TV, TV en Español

|

|

Music & Audio

|

Instruments & Music Makers, MP3 & MP4 Players, Music, Music & Rhythm, Music Info & Reviews, Music Players, Portable Audio & Video, Songbooks & Sheet Music

|

|

New

|

New, New & Notable, New & Updated, Novelty

|

|

News

|

Celebrities, Feed Aggregators, Kindle Newspapers, Local, Local News, Magazines, New & Notable, News & Weather, Newspapers, Podcasts, Radio, Weather, Weather Stations

|

|

Premium Services

|

Most Watched, Top Paid, Featured, 4K Editors Picks, Just Added, Most Popular, Top Free, Recommended

|

|

Religious

|

Faith-Based, Religião, Religion & Spirituality, Religious

|

|

Sports

|

Baseball, Cricket, Extreme Sports,Tennis, Golf, Pool & Billiards, Soccer, Sports & Fitness, Sports Fan News, Sports Games, Sports Information

|

|

Travel

|

Travel Guides, Trip Planners

|

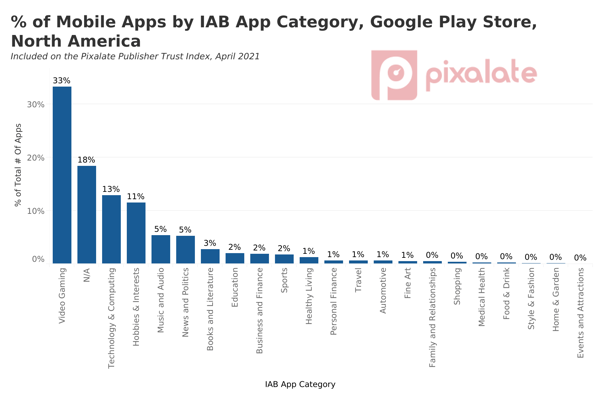
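
The category aggregation table above can be read as a lookup from an original app store category to its aggregated category. The following is a minimal Python sketch of that lookup, not the implementation used for the index. It reproduces only a few rows of the table, and the names `AGGREGATED_TO_ORIGINAL`, `build_reverse_index`, and `aggregate` are hypothetical. A few original categories appear under more than one aggregated category in the table (for example "Stocks & Investing" under both Business and Finance), and the source does not say how such cases are resolved, so the sketch returns every match rather than picking one.

```python
from collections import defaultdict

# Excerpt of the aggregation table (illustrative subset, not the full list).
AGGREGATED_TO_ORIGINAL = {
    "Business": ["Business", "Business Locators", "Stocks & Investing"],
    "Finance": ["Stocks & Investing"],
    "Lifestyle": ["Crafts & DIY", "Furniture", "Home & Garden"],
    "Movies & TV": ["Comedy", "Crafts & DIY", "Movies & TV"],
    "Travel": ["Travel Guides", "Trip Planners"],
}


def build_reverse_index(table):
    """Map each original category (case-insensitive) to its aggregated categories."""
    index = defaultdict(list)
    for aggregated, originals in table.items():
        for original in originals:
            index[original.casefold()].append(aggregated)
    return dict(index)


def aggregate(original, index):
    """Return all aggregated categories for an original category, or [] if unmapped."""
    return index.get(original.strip().casefold(), [])


ORIGINAL_TO_AGGREGATED = build_reverse_index(AGGREGATED_TO_ORIGINAL)
```

Returning a list keeps the ambiguity visible to the caller: `aggregate("Trip Planners", ORIGINAL_TO_AGGREGATED)` yields a single aggregated category, while `aggregate("Stocks & Investing", ORIGINAL_TO_AGGREGATED)` yields two, and an unmapped category yields an empty list.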

Category: The category of the publisher (e.g., News and Media). An “All” category is added so that publishers can be ranked across all categories of a given app store. Categories are determined using the IAB taxonomy.
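
To illustrate how an “All” category sits alongside the per-category rankings, here is a minimal Python sketch. It assumes each publisher row carries a single numeric `score` where higher is better. That field, the function name `rank_publishers`, and the sample rows are hypothetical and are not taken from the index itself, whose actual metrics are described separately.

```python
from collections import defaultdict


def rank_publishers(rows):
    """Rank publishers within each category and within a synthetic "All" category.

    Each row is a dict with "appId", "title", "category", and a hypothetical
    numeric "score" (higher is better). Returns {category: [(rank, appId), ...]}.
    """
    groups = defaultdict(list)
    for row in rows:
        groups[row["category"]].append(row)
        groups["All"].append(row)  # every publisher also competes in "All"

    rankings = {}
    for category, members in groups.items():
        ordered = sorted(members, key=lambda r: r["score"], reverse=True)
        rankings[category] = [
            (rank, r["appId"]) for rank, r in enumerate(ordered, start=1)
        ]
    return rankings


# Hypothetical sample data for one app store.
rows = [
    {"appId": "1001", "title": "Example News", "category": "News and Media", "score": 0.91},
    {"appId": "1002", "title": "Example Games", "category": "Games", "score": 0.75},
    {"appId": "1003", "title": "Example Daily", "category": "News and Media", "score": 0.64},
]
rankings = rank_publishers(rows)
```

With this sample, a publisher that is second within its own category can hold a different position in “All”, because “All” compares it against every publisher in the store.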

The main columns used to identify a publisher are “appId” and “title”. The appId is unique within a given app store. For every appId, the following metrics are produced, characterizing its score relative to other publishers:

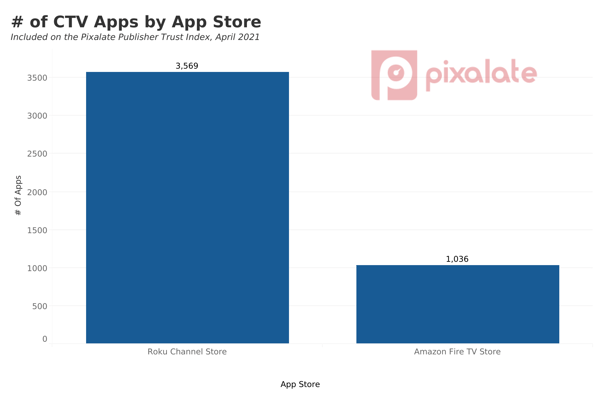

Metrics Used on the Publisher Trust Index for Roku Channel Store apps

Metrics Used on the Publisher Trust Index for Amazon Fire TV apps